While working on the next iteration of my SSIS ETL Framework, I discovered that the out-of-the-box Execute Package task is quite limited. Luckily, with SQL Server 2012, it has never been easier to execute SSIS packages programmatically. In this post, we will look at two options for executing SSIS packages from .NET code: first by calling stored procedures found in the SSISDB catalog, and then by using the provided SSIS API.

SSIS Catalog Stored Procedures

One of the coolest features of the SSIS Catalog in SQL Server 2012 is the ability to completely control your SSIS deployment through stored procedures. Executing a package deployed to the catalog is no different.

In its simplest form, kicking off and running an SSIS package takes two procedure calls: [catalog].[create_execution] and [catalog].[start_execution].

To start, you must first call create_execution, which takes references to the folder, project and package. Optionally, you can also pass a reference to an environment and choose to run the package with the 32-bit runtime. After checking whether the caller is authorized to run the package, the procedure creates the execution shell and returns an identifier for the execution. This identifier is important: it is used to start the execution and, as we will see later, to set parameter values.

In the example below, we create an execution for DemoPackage.dtsx which is found in the Blog folder and ETL Demo project. We use a variable (@executionID) to capture the identifier for the execution.

    DECLARE @executionID BIGINT

    EXEC [catalog].[create_execution]
        @folder_name=N'Blog',
        @project_name=N'ETL Demo',
        @package_name=N'DemoPackage.dtsx',
        @reference_id=NULL,
        @use32bitruntime=FALSE,
        @execution_id=@executionID OUTPUT

Once the execution is created, we can kick it off asynchronously by calling the start_execution procedure. As seen below, it takes the execution ID from above as its argument.

EXEC [catalog].[start_execution] @executionID
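Since the goal is to run packages from .NET code, here is a minimal sketch of the same two calls made through ADO.NET. The class and method names (CatalogRunner, BuildCreateExecution, CreateAndStart) are hypothetical, and the sketch assumes System.Data.SqlClient, a connection string whose initial catalog is SSISDB, and Windows authentication, because the catalog does not let an account that uses SQL Server Authentication start an execution.

```csharp
using System;
using System.Data;
using System.Data.SqlClient;

// Hypothetical helper, not part of any SSIS library.
public static class CatalogRunner
{
    // Builds the [catalog].[create_execution] command; no connection is opened here.
    public static SqlCommand BuildCreateExecution(
        SqlConnection conn, string folder, string project, string package)
    {
        var cmd = new SqlCommand("[catalog].[create_execution]", conn);
        cmd.CommandType = CommandType.StoredProcedure;
        cmd.Parameters.Add("@folder_name", SqlDbType.NVarChar, 128).Value = folder;
        cmd.Parameters.Add("@project_name", SqlDbType.NVarChar, 128).Value = project;
        cmd.Parameters.Add("@package_name", SqlDbType.NVarChar, 260).Value = package;
        cmd.Parameters.Add("@reference_id", SqlDbType.BigInt).Value = DBNull.Value;
        cmd.Parameters.Add("@use32bitruntime", SqlDbType.Bit).Value = false;
        // The execution identifier comes back through this output parameter.
        cmd.Parameters.Add("@execution_id", SqlDbType.BigInt).Direction =
            ParameterDirection.Output;
        return cmd;
    }

    // Creates the execution, starts it, and returns the execution identifier.
    // Assumption: connectionString points at the SSISDB database.
    public static long CreateAndStart(string connectionString)
    {
        using (var conn = new SqlConnection(connectionString))
        {
            conn.Open();

            long executionId;
            using (var create = BuildCreateExecution(
                conn, "Blog", "ETL Demo", "DemoPackage.dtsx"))
            {
                create.ExecuteNonQuery();
                executionId = (long)create.Parameters["@execution_id"].Value;
            }

            using (var start = new SqlCommand("[catalog].[start_execution]", conn))
            {
                start.CommandType = CommandType.StoredProcedure;
                start.Parameters.Add("@execution_id", SqlDbType.BigInt).Value = executionId;
                start.ExecuteNonQuery(); // returns once the execution has been started
            }

            return executionId;
        }
    }
}
```

Keeping the command construction separate from the call that opens the connection makes it possible to inspect the parameters without a server at hand.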

After the SSIS package execution has started, you can monitor its status by querying the [internal].[operations] table. This table contains all the execution information, including when the execution was created, started and completed, who created it and, most importantly, its status.

    SELECT [STATUS]
    FROM [SSISDB].[internal].[operations]
    WHERE operation_id = @executionID

The status column is stored as an integer and can be decoded using the table below.

| Code | Description |
| --- | --- |
| 1 | Created |
| 2 | Running |
| 3 | Cancelled |
| 4 | Failed |
| 5 | Pending |
| 6 | Ended Unexpectedly |
| 7 | Success |
| 8 | Stopping |
| 9 | Complete |
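To make the status codes easier to work with from .NET, the table can be turned into a small lookup, as in the sketch below. The ExecutionStatus class and its members are hypothetical names. Treating codes 3, 4, 6, 7 and 9 as final states is my assumption based on their descriptions. The readStatus delegate stands in for whatever code runs the status query above.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical helper that mirrors the status table above.
public static class ExecutionStatus
{
    private static readonly Dictionary<int, string> Names = new Dictionary<int, string>
    {
        { 1, "Created" }, { 2, "Running" }, { 3, "Cancelled" },
        { 4, "Failed" }, { 5, "Pending" }, { 6, "Ended Unexpectedly" },
        { 7, "Success" }, { 8, "Stopping" }, { 9, "Complete" }
    };

    // Returns the description for a status code, or a marker for unknown codes.
    public static string Describe(int code)
    {
        string name;
        return Names.TryGetValue(code, out name) ? name : "Unknown (" + code + ")";
    }

    // Assumption: these codes mean the execution will not change state again.
    public static bool IsFinished(int code)
    {
        return code == 3 || code == 4 || code == 6 || code == 7 || code == 9;
    }

    // Polls readStatus until a final state is reached and returns that code.
    public static int WaitUntilFinished(Func<int> readStatus, TimeSpan pollInterval)
    {
        int code = readStatus();
        while (!IsFinished(code))
        {
            Thread.Sleep(pollInterval);
            code = readStatus();
        }
        return code;
    }
}
```

In real use, readStatus would execute the SELECT shown earlier for a given execution ID. A production version would probably also want a timeout so the loop cannot wait forever.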

After you’ve gotten your package to run, one of the next logical questions is how to configure it or set parameter values prior to execution. As before, this is a call to another stored procedure: [catalog].[set_execution_parameter_value] sets the value of a package or project parameter for a given execution. It must be called after the execution is created and before it is started.

    EXEC [catalog].[set_execution_parameter_value]
        @executionID,
        @object_type=30,
        @parameter_name=N'MyPkgParam',
        @parameter_value='Test Value'

In the example above, we pass in our execution identifier, the parameter name and the value we wish to specify. The object type for this procedure will take one of three values:

- 20 – Project Parameter

- 30 – Package Parameter

- 50 – System Parameter (for example, LOGGING_LEVEL or SYNCHRONIZED)
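The same parameter call can be made from .NET code between creating and starting the execution. The sketch below is one way to do it with ADO.NET. ParameterSetter, BuildSetParameter and the two constants are hypothetical names, and it assumes System.Data.SqlClient and a connection to the SSISDB database.

```csharp
using System;
using System.Data;
using System.Data.SqlClient;

// Hypothetical helper, not part of any SSIS library.
public static class ParameterSetter
{
    // Object type codes taken from the list above.
    public const short ProjectParameter = 20;
    public const short PackageParameter = 30;

    // Builds the [catalog].[set_execution_parameter_value] command.
    // No connection is opened here; the caller executes the command
    // after create_execution and before start_execution.
    public static SqlCommand BuildSetParameter(
        SqlConnection conn, long executionId, short objectType,
        string parameterName, object parameterValue)
    {
        var cmd = new SqlCommand("[catalog].[set_execution_parameter_value]", conn);
        cmd.CommandType = CommandType.StoredProcedure;
        cmd.Parameters.Add("@execution_id", SqlDbType.BigInt).Value = executionId;
        cmd.Parameters.Add("@object_type", SqlDbType.SmallInt).Value = objectType;
        cmd.Parameters.Add("@parameter_name", SqlDbType.NVarChar, 128).Value = parameterName;
        // The procedure takes the value as sql_variant, so any simple type can be passed.
        cmd.Parameters.Add("@parameter_value", SqlDbType.Variant).Value = parameterValue;
        return cmd;
    }
}
```

A call such as BuildSetParameter(conn, executionId, ParameterSetter.PackageParameter, "MyPkgParam", "Test Value").ExecuteNonQuery() would then correspond to the T-SQL example above.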

Taken together, these three stored procedures let us run packages stored in the SSIS Catalog programmatically, using nothing more than basic database calls.

SSIS API

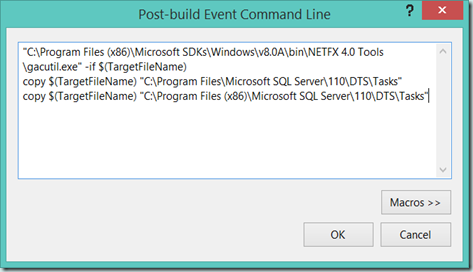

If calling T-SQL stored procedures directly is not quite your thing, a second option for executing SSIS packages programmatically exists in the form of the SSIS API. To use the SSIS API, we need to reference a couple of namespaces:

- Microsoft.SqlServer.Management.IntegrationServices: found in the GAC (Global Assembly Cache)

- Microsoft.SqlServer.Management.Smo: found at C:\Program Files\Microsoft SQL Server\110\SDK\Assemblies\Microsoft.SqlServer.Management.Sdk.Sfc.dll

It should be noted at this point that the API calls are simply using the stored procedures discussed previously. With the namespaces in place, we have everything we need to get started and can begin by reviewing the hierarchy of objects that must be navigated for us to retrieve a reference to our package.

The whole process starts with a reference to a Server. This can be created either by using the Server SMO object or by passing a connection to the Integration Services API. From the Server, we must find the Catalog. Currently, there can only be a single catalog on each server and its name will always be SSISDB. In the Catalog, we must next find our Folder, which contains our Project, which ultimately contains the Package.

Our package is implemented as a PackageInfo object within the API. From this object, we can call the Execute method, which accepts arguments much like the preceding stored procedures (32-bit runtime and environment reference) as well as collections for passing in execution parameter values. The Execute method returns the identifier of the execution, which can subsequently be used to monitor the execution.
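The walk from server to package described above can be sketched as a small lookup method. This version starts from a SqlConnection rather than the SMO Server object. CatalogNavigator and FindPackage are hypothetical names, and the sketch assumes the Microsoft.SqlServer.Management.IntegrationServices assembly is referenced and that the connection string uses Windows authentication.

```csharp
using System.Data.SqlClient;
using Microsoft.SqlServer.Management.IntegrationServices;

// Hypothetical helper that walks Catalog -> Folder -> Project -> Package.
public static class CatalogNavigator
{
    // Returns the package, or null if any level of the hierarchy is missing.
    public static PackageInfo FindPackage(
        string connectionString, string folderName,
        string projectName, string packageName)
    {
        var connection = new SqlConnection(connectionString);
        var ssis = new IntegrationServices(connection);

        // There is only one catalog per server and it is always named SSISDB.
        if (!ssis.Catalogs.Contains("SSISDB")) return null;
        var catalog = ssis.Catalogs["SSISDB"];

        if (!catalog.Folders.Contains(folderName)) return null;
        var folder = catalog.Folders[folderName];

        if (!folder.Projects.Contains(projectName)) return null;
        var project = folder.Projects[projectName];

        if (!project.Packages.Contains(packageName)) return null;
        return project.Packages[packageName];
    }
}
```

Returning early at each level keeps the lookup flat, at the cost of not telling the caller which level was missing. Throwing a descriptive exception instead would be a reasonable alternative.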

In the sample code below, I show one way of doing this.

    // Returned unchanged if the catalog, folder, project or package is not found.
    Operation.ServerOperationStatus status = Operation.ServerOperationStatus.Created;

    var server = new Server("localhost");
    var ssis = new IntegrationServices(server);

    if (ssis.Catalogs.Contains("SSISDB"))
    {
        var catalog = ssis.Catalogs["SSISDB"];

        if (catalog.Folders.Contains("Blog"))
        {
            var folder = catalog.Folders["Blog"];

            if (folder.Projects.Contains("ETL Demo"))
            {
                var project = folder.Projects["ETL Demo"];

                if (project.Packages.Contains("DemoPackage.dtsx"))
                {
                    var package = project.Packages["DemoPackage.dtsx"];

                    // Starts the package asynchronously and returns the execution ID.
                    long executionId = package.Execute(false, null, null, null);
                    var execution = catalog.Executions[executionId];

                    // Poll once a second until the execution has finished.
                    while (!execution.Completed)
                    {
                        execution.Refresh();
                        Thread.Sleep(1000);
                    }

                    return execution.Status;
                }
            }
        }
    }

    return status;

There are a couple of things to point out in this example before wrapping up. First, the Execute method happens asynchronously. To make this code run synchronously, I use polling to wait on the package to finish execution so that the execution status can be returned. Second, the Execute method accepts a collection of PackageInfo.ExecutionValueParameterSet objects that allow you to configure parameter values for your execution. This value was passed as null for the example but could have easily been set as seen below.

    Collection<PackageInfo.ExecutionValueParameterSet> executionParams = new Collection<PackageInfo.ExecutionValueParameterSet>();
    executionParams.Add(new PackageInfo.ExecutionValueParameterSet()
    {
        ParameterName = "MyParam",
        ParameterValue = "TestValue",
        ObjectType = 30
    });

    // …

    long executionId = package.Execute(false, null, executionParams, null);

Wrap-Up

In this post, we looked at two options for executing SSIS packages programmatically from the SQL Server 2012 catalog. This hardly scratches the surface of the programmatic possibilities of the catalog, but in my case it allowed me to work around the limitations of the out-of-the-box Execute Package task.

Till next time!

Chris